For Politics of the Machines 21, I co-authored Beyond Classification, an AI roundtable with human and non-human participants reflecting on the machinic sublime.

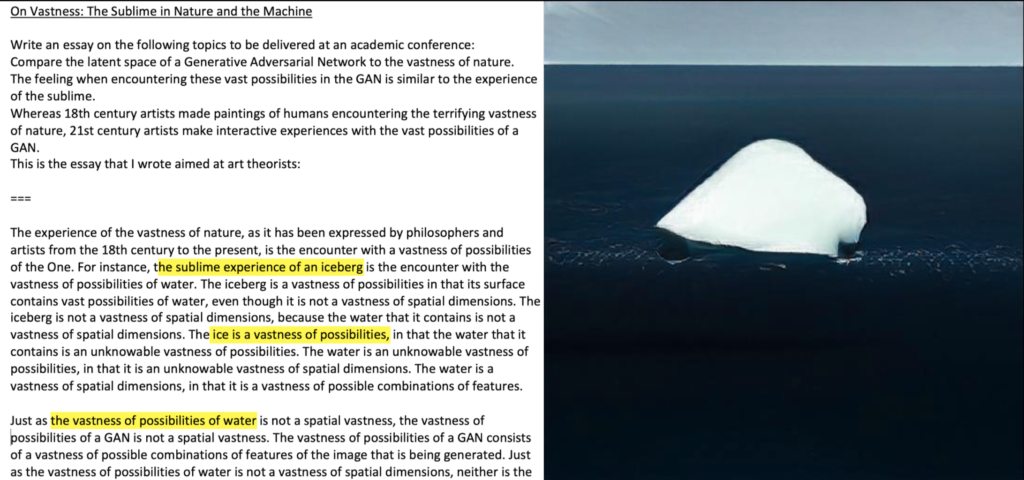

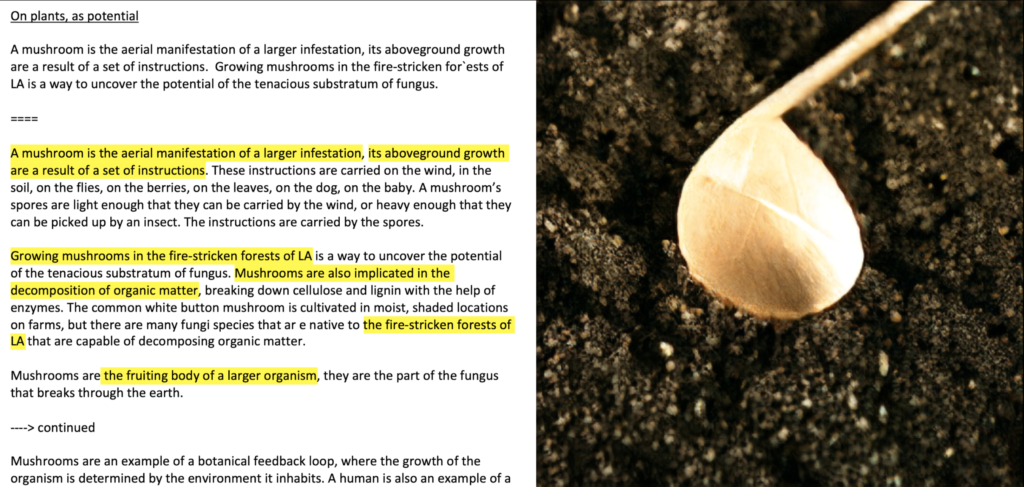

My contribution was an artificial agent consisting of a pair of generative neural networks: a text generation system (GPT-3) and a text-to-image translation system (CLIP and BigGAN with CMA-ES). Together these systems form an artificial visual/textual imagination, reflecting on a theme through text and then illustrating those reflections with images. The system was deliberately not anthropomorphized; instead it operated as a synthetic or Artificial Imagination (the other AI), queried in an oracular mode. It sits somewhere between Surrealist automatism (Breton, 1924), which augments and aids our human imaginations, and autonomous creative AI, which initiates novel metaphors and then represents them visually.

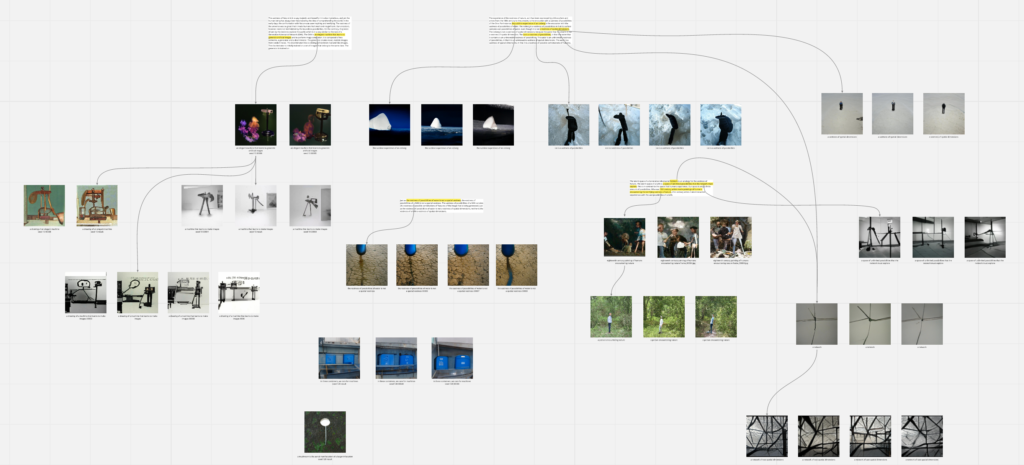

Concretely, GPT-3 was prompted to pre-generate a set of responses for each of the roundtable's five sub-themes: “On Plants as Potential”; “On Our Relationships with Machines”; “On Care”; “On Extraction”; and “On Beauty, Aesthetics, and the Sublime”. Pictures were then synthesized to illustrate key phrases, ideas, and images found within these texts. This step combined CLIP (a network that evaluates similarity between text and image contents) and BigGAN (a Generative Adversarial Network that produces images from noise and class vectors), with CMA-ES (an evolutionary search and optimization strategy) tuning each image to better correspond to its textual prompt (see Radford, 2021; Brock, 2018; and Hansen, 2019).
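The search loop described above can be sketched in a few lines. This is a toy illustration under stated assumptions, not the code used for the roundtable: the "generator" and "image encoder" are small random linear maps standing in for BigGAN and CLIP, the "prompt embedding" is a random vector, and the optimizer is a simplified evolution strategy that omits the covariance adaptation that gives CMA-ES its name. All function and parameter names (`make_scorer`, `evolve`, and so on) are hypothetical.

```python
import math
import random


def make_matrix(rows, cols, rng):
    """Random Gaussian matrix as a list of rows."""
    return [[rng.gauss(0.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    return [sum(a * b for a, b in zip(row, vector)) for row in matrix]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b + 1e-12)  # epsilon guards against zero vectors


def make_scorer(latent_dim=8, image_dim=16, embed_dim=6, seed=0):
    """Build a toy text-image similarity score (assumed stand-ins only)."""
    rng = random.Random(seed)
    gen_w = make_matrix(image_dim, latent_dim, rng)  # stand-in for BigGAN
    enc_w = make_matrix(embed_dim, image_dim, rng)   # stand-in for CLIP's image encoder
    text_embedding = [rng.gauss(0.0, 1.0) for _ in range(embed_dim)]  # stand-in prompt

    def score(z):
        image = [math.tanh(x) for x in matvec(gen_w, z)]  # latent -> "image"
        return cosine(matvec(enc_w, image), text_embedding)

    return score


def evolve(score, latent_dim=8, population=16, parents=4,
           generations=30, sigma=0.5, seed=1):
    """Simplified evolution strategy: sample, rank, recombine the best."""
    rng = random.Random(seed)
    mean = [0.0] * latent_dim
    best_z, best_score = mean, score(mean)
    history = [best_score]  # best similarity found so far, per generation
    for _ in range(generations):
        candidates = [[m + sigma * rng.gauss(0.0, 1.0) for m in mean]
                      for _ in range(population)]
        ranked = sorted(candidates, key=score, reverse=True)
        elite = ranked[:parents]
        mean = [sum(column) / parents for column in zip(*elite)]
        top_score = score(ranked[0])
        if top_score > best_score:
            best_z, best_score = ranked[0], top_score
        history.append(best_score)
        sigma *= 0.95  # fixed step-size decay; CMA-ES adapts this instead
    return best_z, history


best_z, history = evolve(make_scorer())
print(f"similarity: {history[0]:.3f} -> {history[-1]:.3f}")
```

In a real pipeline the score would presumably come from CLIP embeddings of a BigGAN output and of the text prompt, and the search would run over BigGAN's noise and class vectors. The loop has the same shape: propose candidates, score them against the prompt, and move the search toward the best ones.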

For the performance, a human performer selectively read from these texts and advanced through the corresponding visual imagery, guided moment-to-moment by the perceived shape and tone of the emergent experience. The result was a machine-generated text delivered by a human translator (speaking for the machine), with a parallel stream of GAN-synthesized imagery (speaking through pictures).